Introduction

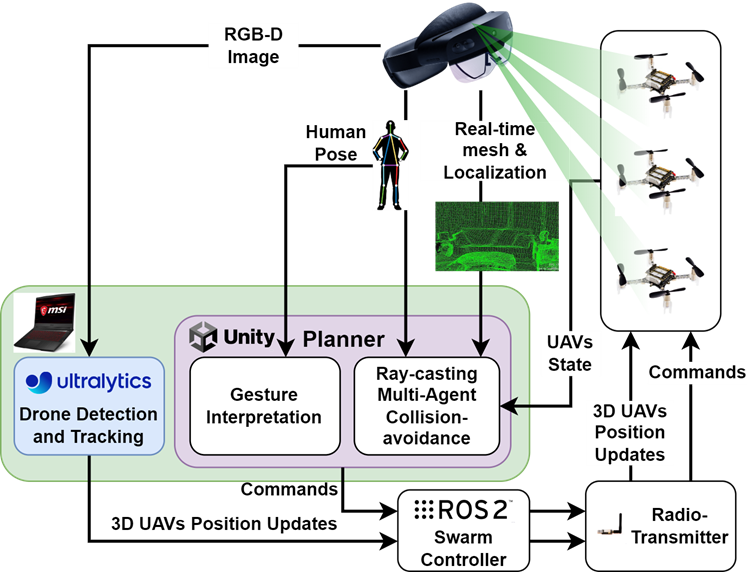

This project was carried out in the 3D Vision course at ETH by a team of four. We built a system for real-time gesture control of two drones, with collision avoidance between the drones and the environment. The results are included below.

System architecture

Joint Hololens-UAV SLAM

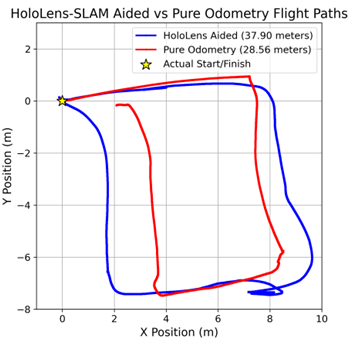

Updating the drone's state estimate with the Hololens' SLAM eliminates the drift in that estimate. This can be seen in the images below. The red track shows the path the drone thinks it has flown when it relies only on its own odometry, without the Hololens' SLAM. The blue track shows the path the drone actually flew, which returns to the takeoff position.
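One common way to apply an external pose fix to a drifting odometry estimate is to maintain a correction transform between the odometry frame and the SLAM frame. The sketch below illustrates that idea only; it is not the project's implementation. It assumes planar (2D) poses for brevity, and the names `se2` and `DriftCorrector` are hypothetical.

```python
import numpy as np


def se2(x, y, theta):
    """Build a 3x3 homogeneous transform for a 2D pose (hypothetical helper)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, x],
                     [s, c, y],
                     [0.0, 0.0, 1.0]])


class DriftCorrector:
    """Maps poses from the drifting odometry frame into the SLAM frame.

    Assumption: the SLAM pose is treated as ground truth at the moment
    of each update. A real system would likely filter or weight it.
    """

    def __init__(self):
        self.correction = np.eye(3)

    def update(self, odom_pose, slam_pose):
        # Choose the correction so that correction @ odom_pose == slam_pose.
        self.correction = slam_pose @ np.linalg.inv(odom_pose)

    def corrected(self, odom_pose):
        # Apply the latest correction to a raw odometry pose.
        return self.correction @ odom_pose


# Odometry has drifted: it reports (1.2, 0.3) with a small heading error,
# while the external SLAM reports (1.0, 0.0) with zero heading.
odom = se2(1.2, 0.3, 0.05)
slam = se2(1.0, 0.0, 0.0)

corrector = DriftCorrector()
corrector.update(odom, slam)

# The drone then moves 0.5 m forward in its own body frame.
odom_later = odom @ se2(0.5, 0.0, 0.0)
fixed_later = corrector.corrected(odom_later)
print(fixed_later[:2, 2])  # corrected position in the SLAM frame
```

Between SLAM updates the corrected pose still follows the odometry increments, so drift can only build up over the interval since the last update rather than over the whole flight.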