Introduction

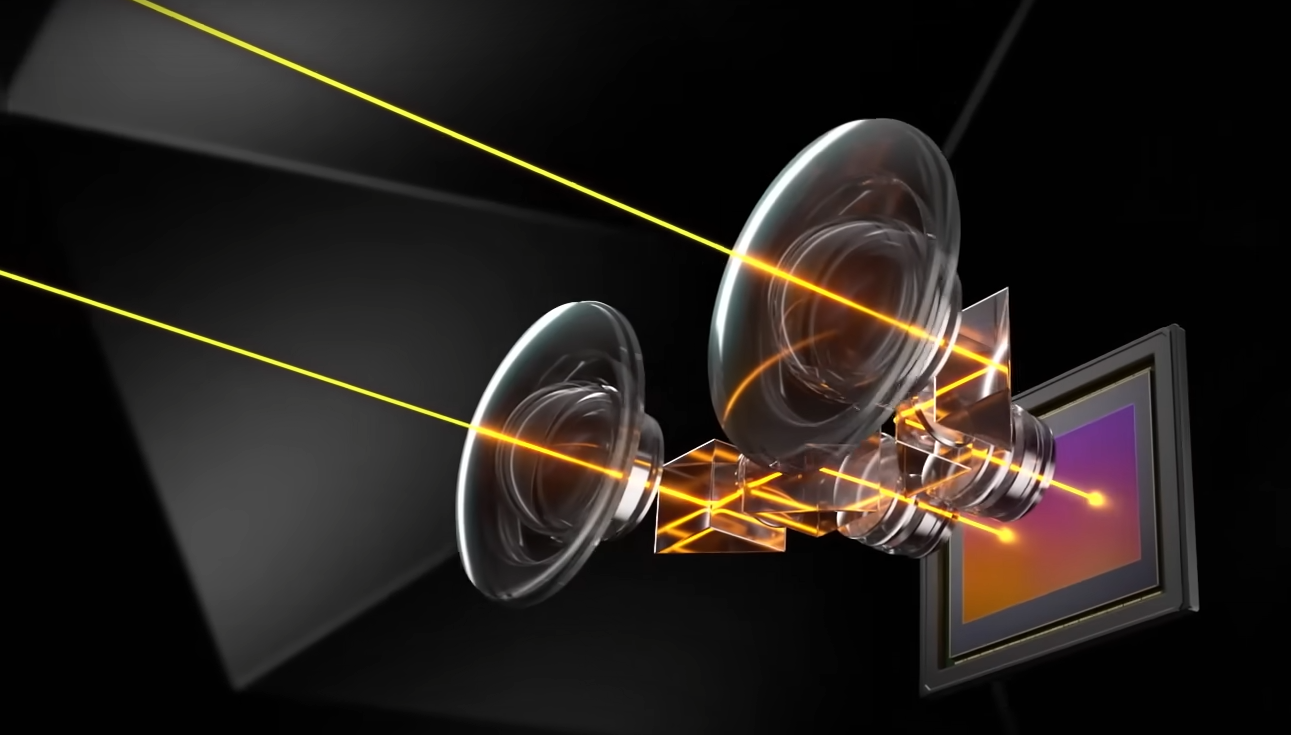

At Bladesense we push the boundaries of reality capture to apply it in the aerospace industry. The state of the art in reality capture is moving ever further towards full light field reconstruction. This reconstruction is being approached from multiple angles: on the sensor side with virtual reality cameras, and on the software side with inverse rendering algorithms such as (neural) radiance fields and optimization-based inverse path tracers. I think the future of reality capture lies in virtual reality cameras with multiple complex wide-angle lenses, like these:

Because of their wider coverage, these devices dramatically improve capture ergonomics. However, this advantage comes with one main problem: they tend to create many singularities in traditional reconstruction pipelines based on projective geometry. This makes it clear that we will need better lens and light transportation modelling to take the reconstruction of our world to the next level. To be at the forefront of this trend, I first wanted to expose myself to the forward rendering side of this equation, to understand what our models are capable of and where they fail, so I enrolled in the ETH Advanced Computer Graphics course. Here are some of the coolest renders I made in my own rendering engine using what I learned:

Lens Modelling

Below I show a set of images produced by Canon's dual fisheye lens reality capture camera. Interestingly, these images are formed by focusing two lenses onto a single shared image sensor.

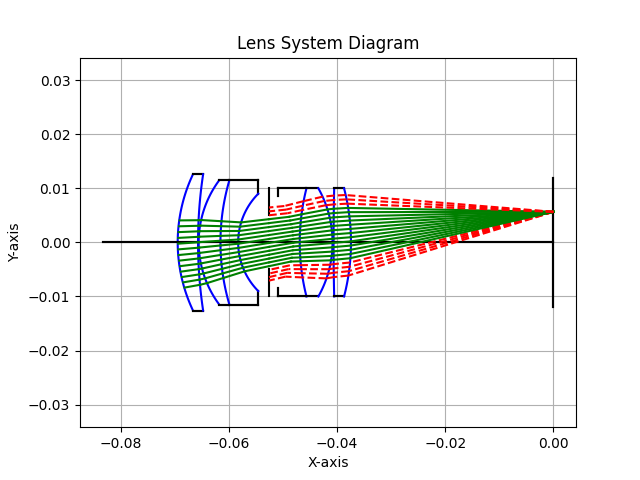

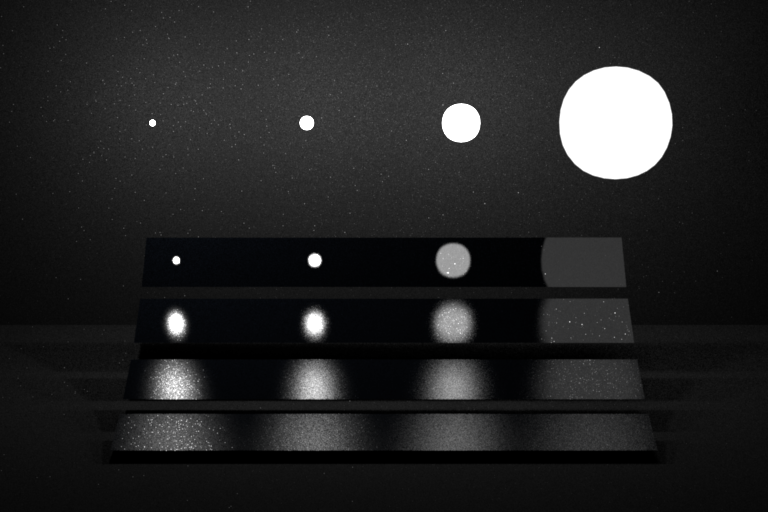

If we want any hope of using these images for accurate reconstruction, we need a better lens model that accounts for a wide range of optical effects (reducing to the pinhole model introduces either unacceptable information loss or unacceptable accuracy loss). In my computer graphics project I fully modelled a lens and aperture system, including internal reflections. The image below shows the sensor-to-exit paths for an assembly of four thick lenses and a single aperture:
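The central operation behind such a sequential lens trace is intersecting a ray with a spherical lens surface and bending it with the vector form of Snell's law. The Python sketch below shows only that single step and is not the code from my renderer: the function names, the tuple-based vectors and the example surface (vertex at z = 0, radius 50, air to glass with index 1.5) are illustrative assumptions. It takes the nearest hit in front of the ray, which suffices for the convex surface in the example, and it leaves out the Fresnel-weighted reflection branch that internal reflections would additionally need.

```python
import math

# Minimal 3-vector helpers on plain tuples.
def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

def intersect_sphere(origin, direction, center, radius):
    """Nearest t > 0 with |origin + t*direction - center| = |radius|, or None.
    `direction` must be a unit vector."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-9:
            return t
    return None

def refract(d, n, eta_i, eta_t):
    """Vector Snell's law for unit vectors d (incident) and n (normal).
    n is flipped to face against d; returns None on total internal reflection."""
    cos_i = -dot(d, n)
    if cos_i < 0.0:
        n, cos_i = scale(n, -1.0), -cos_i
    eta = eta_i / eta_t
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        return None
    return add(scale(d, eta), scale(n, eta * cos_i - math.sqrt(k)))

def trace_surface(origin, direction, center, radius, eta_i, eta_t):
    """Hit one spherical lens surface and refract through it.
    Returns (hit_point, new_direction) or None if the ray misses or is totally reflected."""
    t = intersect_sphere(origin, direction, center, radius)
    if t is None:
        return None
    hit = add(origin, scale(direction, t))
    normal = normalize(sub(hit, center))
    new_dir = refract(direction, normal, eta_i, eta_t)
    return None if new_dir is None else (hit, new_dir)

# Usage: an axis-parallel ray 5 units off-axis entering a convex glass surface.
result = trace_surface((0.0, 5.0, -10.0), (0.0, 0.0, 1.0), (0.0, 0.0, 50.0), 50.0, 1.0, 1.5)
```

Chaining `trace_surface` over every surface of an assembly, with the medium indices swapped at each interface, would produce sensor-to-exit paths of the kind shown in the image.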

This results in the full images shown below, which showcase two scenes with high dynamic range and specular effects. They are qualitatively similar to the Canon images and show organic vignetting, distortion, depth of field, aperture effects (customisable, here a pentagon) and specular inter-lens effects (although not all of them are sampled equally efficiently).
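One simple way to get a pentagonal aperture shape, sketched here under the assumption of a regular polygon centred on the optical axis, is to sample ray origins uniformly over the polygon: split it into congruent triangles around the centre, choose one uniformly, then place a point uniformly inside that triangle with the square-root warp. The function names and parameters are hypothetical, not my renderer's API.

```python
import math
import random

def sample_polygon_aperture(u0, u1, u2, n_blades=5, radius=1.0, rotation=0.0):
    """Map three uniform numbers in [0, 1) to a uniformly distributed point inside a
    regular n-gon aperture with n_blades sides and circumradius `radius`."""
    # The polygon splits into n congruent triangles fanning out from the centre,
    # so each triangle is picked with equal probability.
    k = min(int(u0 * n_blades), n_blades - 1)
    a0 = rotation + 2.0 * math.pi * k / n_blades
    a1 = rotation + 2.0 * math.pi * (k + 1) / n_blades
    # Uniform point in the triangle (centre, v0, v1) via the square-root warp.
    s = math.sqrt(u1)
    w0, w1 = s * (1.0 - u2), s * u2
    x = radius * (w0 * math.cos(a0) + w1 * math.cos(a1))
    y = radius * (w0 * math.sin(a0) + w1 * math.sin(a1))
    return x, y

def sample_lens_point(lens_radius, rng=random):
    """Hypothetical helper: an aperture-plane origin for a depth-of-field camera ray."""
    return sample_polygon_aperture(rng.random(), rng.random(), rng.random(), 5, lens_radius)

# Usage: an origin on a pentagonal aperture of circumradius 0.5.
random.seed(0)
origin_on_aperture = sample_lens_point(0.5)
```

Changing `n_blades` or `rotation` changes the bokeh shape without touching the rest of the camera model.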

One place where I did have to cheat is aperture diffraction. These effects can't be rendered with traditional path tracers, because the wave effects that cause them are not captured by the assumptions of geometric optics. I made a simple model of them by convolving my before-aperture radiance images with a unit-impulse lens flare generated by realflare. Convolving images in this way rests on another linearity assumption, which Fraunhofer diffraction actually satisfies in the far-field limit. However, this assumption disconnects the aperture diffraction from the rest of the lens system, so distortions by the final two lenses are not present in the flares, as can be seen below.
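In the Fraunhofer (far-field) approximation, the diffraction pattern of an aperture is proportional to the squared magnitude of the Fourier transform of its transmission function, and applying that pattern to an image is a linear, shift-invariant convolution. The plain-Python sketch below illustrates both steps on a tiny grid. It is not how realflare works: it assumes a single wavelength, ignores the physical scaling between grid frequencies and image pixels, and uses a naive DFT and a circular convolution instead of an FFT. All function names are hypothetical.

```python
import cmath
import math

def dft1(seq):
    """Naive 1D discrete Fourier transform, O(n^2)."""
    n = len(seq)
    return [sum(seq[k] * cmath.exp(-2j * math.pi * k * f / n) for k in range(n)) for f in range(n)]

def dft2(grid):
    """Separable 2D DFT: transform every row, then every column."""
    rows = [dft1(r) for r in grid]
    cols = [dft1(list(c)) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]

def polygon_mask(size, n_blades=5, radius=0.25):
    """Binary transmission mask of a regular polygon aperture centred in a size x size grid."""
    c = (size - 1) / 2.0
    verts = [(c + radius * size * math.cos(2 * math.pi * k / n_blades),
              c + radius * size * math.sin(2 * math.pi * k / n_blades)) for k in range(n_blades)]
    edges = list(zip(verts, verts[1:] + verts[:1]))

    def inside(x, y):
        return all((x1 - x0) * (y - y0) - (y1 - y0) * (x - x0) >= 0.0 for (x0, y0), (x1, y1) in edges)

    return [[1.0 if inside(x, y) else 0.0 for y in range(size)] for x in range(size)]

def fraunhofer_psf(aperture):
    """Far-field PSF: |Fourier transform of the aperture|^2, normalised to sum 1.
    The peak sits at index (0, 0) and the pattern wraps around the grid edges."""
    intensity = [[abs(z) ** 2 for z in row] for row in dft2(aperture)]
    total = sum(map(sum, intensity))
    return [[v / total for v in row] for row in intensity]

def convolve_circular(image, kernel):
    """Circular 2D convolution: every pixel spreads its radiance according to the kernel."""
    n, m = len(image), len(image[0])
    out = [[0.0] * m for _ in range(n)]
    for x in range(n):
        for y in range(m):
            value = image[x][y]
            if value == 0.0:
                continue
            for i in range(n):
                for j in range(m):
                    out[(x + i) % n][(y + j) % m] += value * kernel[i][j]
    return out

# Usage: a single bright pixel (a point light) turns into a copy of the diffraction pattern.
size = 16
psf = fraunhofer_psf(polygon_mask(size))
image = [[0.0] * size for _ in range(size)]
image[4][9] = 100.0
flared = convolve_circular(image, psf)
```

Because the kernel is the same everywhere in the image, the sketch has exactly the limitation described above: it cannot know about distortions introduced by lenses after the aperture.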

Light Transportation

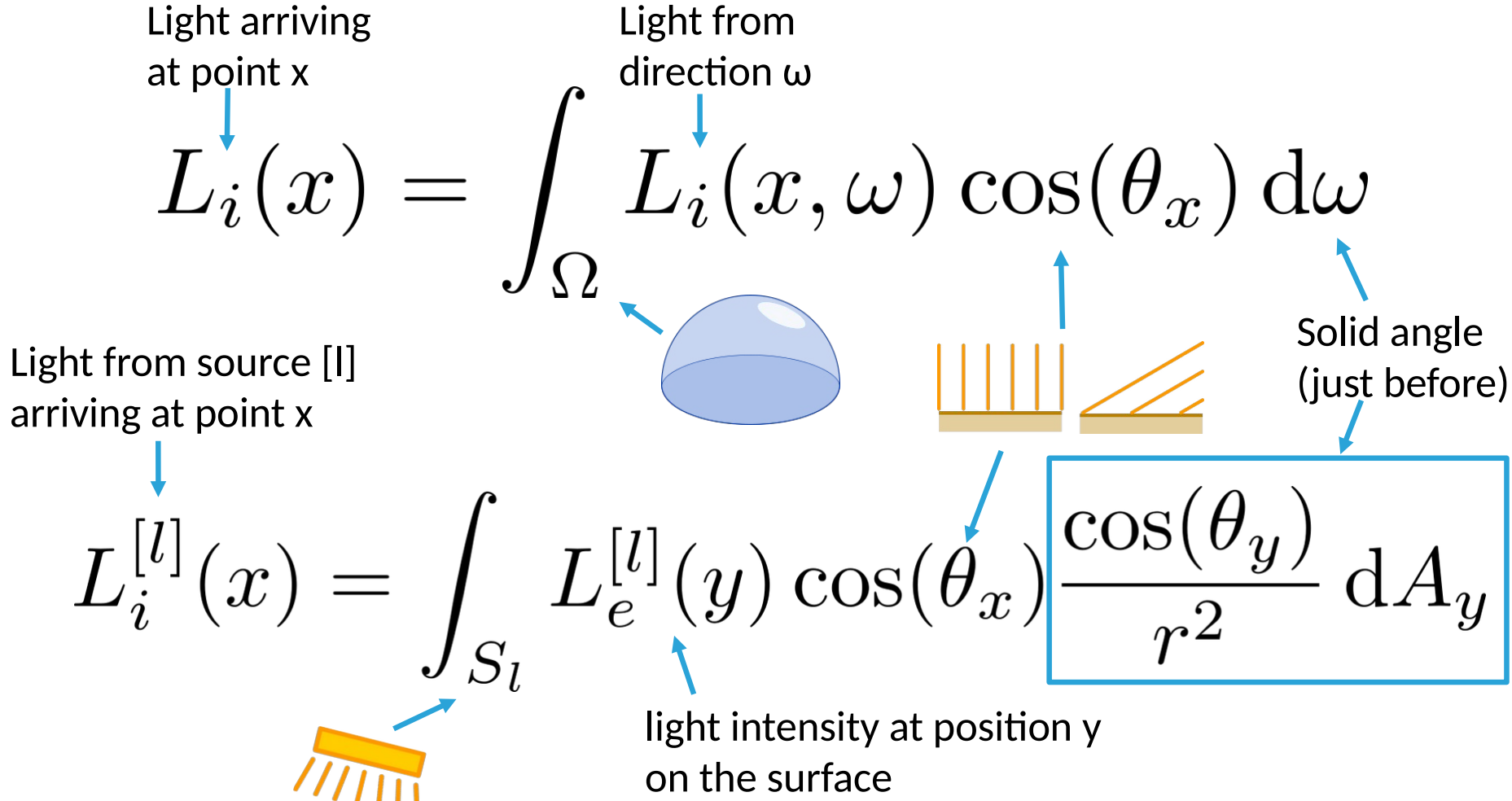

With my eye on future inverse rendering, I also dove into traditional light transportation modelling. Current computer graphics approaches basically model this problem using the assumptions of geometric optics. Through a very long derivation with many assumptions, this approach reduces the electromagnetic wave equations to the famous rendering equation shown below:
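For reference, the rendering equation is commonly written in the hemispherical form below (standard textbook notation, which may differ from the figure): the outgoing radiance L_o at point x in direction ω_o is the emitted radiance L_e plus the incident radiance L_i from every direction ω_i in the hemisphere Ω around the surface normal n, weighted by the BRDF f_r and the cosine term.

$$
L_o(\mathbf{x}, \omega_o) = L_e(\mathbf{x}, \omega_o) + \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\, L_i(\mathbf{x}, \omega_i)\, (\omega_i \cdot \mathbf{n})\, \mathrm{d}\omega_i
$$

A path tracer estimates the integral by Monte Carlo, drawing N directions ω_k from a density p:

$$
L_o(\mathbf{x}, \omega_o) \approx L_e(\mathbf{x}, \omega_o) + \frac{1}{N} \sum_{k=1}^{N} \frac{f_r(\mathbf{x}, \omega_k, \omega_o)\, L_i(\mathbf{x}, \omega_k)\, (\omega_k \cdot \mathbf{n})}{p(\omega_k)}
$$

How well p matches the integrand is what the importance sampling techniques in the following sections are about.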

I will go through a quick list of the coolest features I implemented in my renderer.

Volumetric Path Tracing

Some materials interact with light in more complicated ways than just reflection, absorption and refraction. We call these participating media and render them using volumetric path tracing. Below you can see a not-entirely translucent sphere and light scattering in a foggy environment.
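In the simplest case, a homogeneous medium, the key ingredient is sampling the distance to the next scattering event in proportion to the Beer-Lambert transmittance T(t) = exp(-σ_t t). The sketch below assumes a single (grey) extinction coefficient σ_t = σ_s + σ_a; the names are hypothetical, and heterogeneous media would need a technique such as delta tracking instead.

```python
import math
import random

def transmittance(sigma_t, distance):
    """Beer-Lambert transmittance through a homogeneous medium."""
    return math.exp(-sigma_t * distance)

def sample_free_flight(sigma_t, u):
    """Distance to the next interaction, sampled with pdf sigma_t * exp(-sigma_t * t)
    by inverting the CDF 1 - exp(-sigma_t * t); u is uniform in [0, 1)."""
    return -math.log(1.0 - u) / sigma_t

def sample_medium_interaction(sigma_s, sigma_a, surface_distance, u):
    """Decide whether a ray scatters inside the medium before reaching the next surface.

    Returns (scattered, distance, throughput_weight). Because the distance pdf is
    proportional to the transmittance, the weight reduces to the single-scattering
    albedo sigma_s / sigma_t for a medium event and to 1 when the surface is reached."""
    sigma_t = sigma_s + sigma_a
    t = sample_free_flight(sigma_t, u)
    if t < surface_distance:
        return True, t, sigma_s / sigma_t
    return False, surface_distance, 1.0

# Usage: fog with sigma_t = 1 per metre; roughly exp(-1) of the rays cross 1 m unscattered.
random.seed(0)
events = [sample_medium_interaction(0.8, 0.2, 1.0, random.random()) for _ in range(100000)]
reached = sum(1 for scattered, _, _ in events if not scattered) / len(events)
```

Sampling distances this way makes the transmittance cancel out of the path throughput, which keeps the estimator's variance low in dense fog.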

The approach I implemented can deal with different types of scattering, including forward and backward scattering.
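A common way to cover both forward and backward scattering with one parameter is the Henyey-Greenstein (HG) phase function, whose asymmetry parameter g runs from -1 (backward) through 0 (isotropic) to 1 (forward) and equals the mean cosine of the scattering angle. I am assuming HG here purely for illustration; the sketch inverts its CDF to sample a new direction, and the function names are hypothetical.

```python
import math
import random

def henyey_greenstein(cos_theta, g):
    """HG phase function. cos_theta is measured between the propagation directions
    before and after scattering, so g > 0 favours forward and g < 0 backward scattering."""
    denom = 1.0 + g * g - 2.0 * g * cos_theta
    return (1.0 - g * g) / (4.0 * math.pi * denom ** 1.5)

def sample_hg_cos_theta(g, u):
    """Invert the HG CDF for cos(theta); falls back to isotropic scattering for g close to 0."""
    if abs(g) < 1e-3:
        return 1.0 - 2.0 * u
    s = (1.0 - g * g) / (1.0 - g + 2.0 * g * u)
    return (1.0 + g * g - s * s) / (2.0 * g)

def sample_hg_direction(direction, g, u1, u2):
    """Scatter the unit vector `direction` (the way light travels) into a new unit direction."""
    cos_t = sample_hg_cos_theta(g, u1)
    sin_t = math.sqrt(max(0.0, 1.0 - cos_t * cos_t))
    phi = 2.0 * math.pi * u2
    # Orthonormal basis (t1, t2, direction) around the incoming direction.
    dx, dy, dz = direction
    a = (1.0, 0.0, 0.0) if abs(dx) < 0.9 else (0.0, 1.0, 0.0)
    t1 = (a[1] * dz - a[2] * dy, a[2] * dx - a[0] * dz, a[0] * dy - a[1] * dx)  # a x direction
    norm = math.sqrt(t1[0] ** 2 + t1[1] ** 2 + t1[2] ** 2)
    t1 = (t1[0] / norm, t1[1] / norm, t1[2] / norm)
    t2 = (dy * t1[2] - dz * t1[1], dz * t1[0] - dx * t1[2], dx * t1[1] - dy * t1[0])  # direction x t1
    return tuple(sin_t * math.cos(phi) * t1[i] + sin_t * math.sin(phi) * t2[i] + cos_t * direction[i]
                 for i in range(3))

# Usage: strongly forward-scattering fog (g = 0.8) deflects a ray travelling along +z only slightly on average.
random.seed(0)
new_dir = sample_hg_direction((0.0, 0.0, 1.0), 0.8, random.random(), random.random())
```

Because the sampled cosine follows the phase function exactly, the phase function and its pdf cancel in the path throughput.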

Multiple Importance Sampling

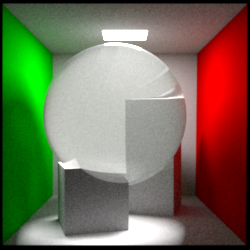

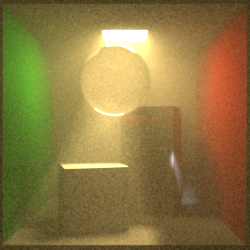

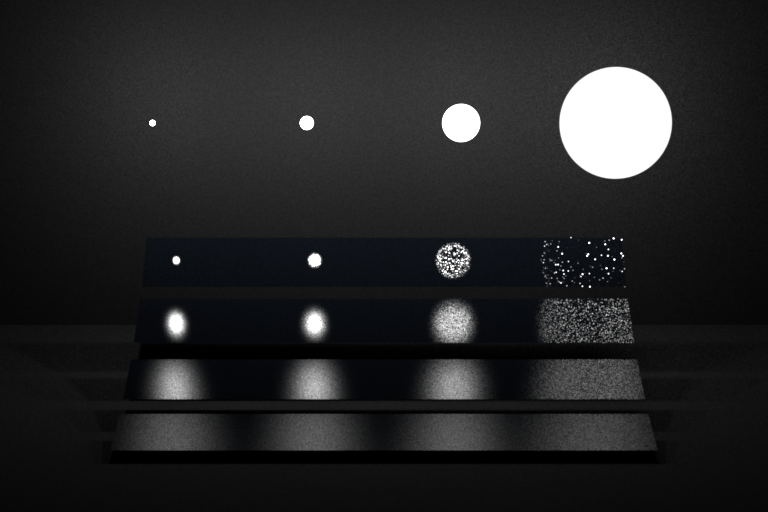

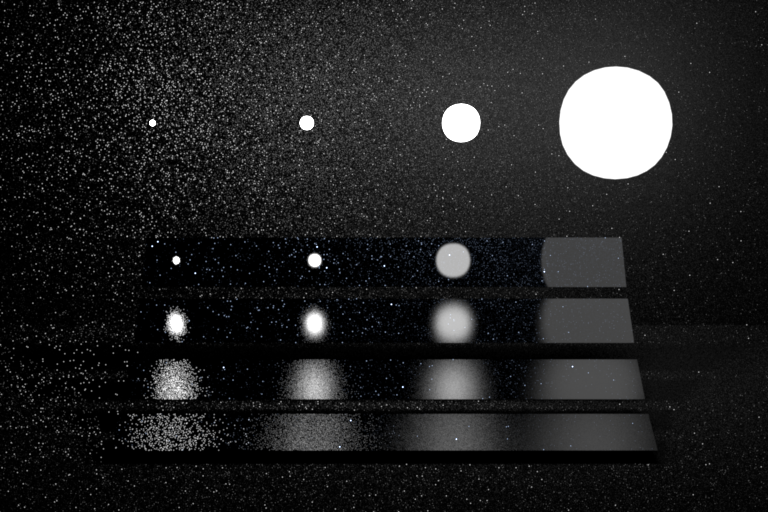

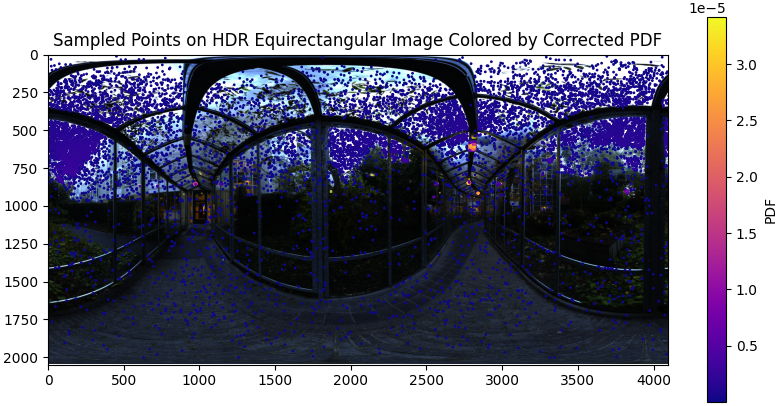

Some approaches to solving the rendering equation work better than others in different situations. In the image below you can see different methods succeeding in different parts of the scene. The right image combines the two methods, weighting each one most heavily where it performs best. This is called multiple importance sampling.
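To make the weighting concrete, here is a toy one-dimensional sketch of multiple importance sampling with Veach's power heuristic. In a renderer the two strategies would typically be BSDF sampling and light sampling; here they are stand-ins (uniform sampling, and sampling proportional to 2x) for estimating the integral of 3x² over [0, 1], whose exact value is 1. The integrand, densities and function names are illustrative assumptions.

```python
import math
import random

def power_heuristic(n_f, pdf_f, n_g, pdf_g, beta=2.0):
    """Veach's power heuristic: MIS weight for a sample drawn from strategy f
    (beta = 1 gives the balance heuristic)."""
    a = (n_f * pdf_f) ** beta
    b = (n_g * pdf_g) ** beta
    return a / (a + b) if a + b > 0.0 else 0.0

def integrand(x):
    return 3.0 * x * x          # exact integral over [0, 1] is 1

def pdf_uniform(x):
    return 1.0                  # strategy A: x = u

def pdf_linear(x):
    return 2.0 * x              # strategy B: x = sqrt(u)

def mis_estimate(u_a, u_b):
    """Combine one sample from each strategy into a single unbiased estimate."""
    x_a = u_a
    p_a = pdf_uniform(x_a)
    total = power_heuristic(1, p_a, 1, pdf_linear(x_a)) * integrand(x_a) / p_a
    x_b = math.sqrt(u_b)
    p_b = pdf_linear(x_b)
    if p_b > 0.0:               # a zero-pdf sample carries no weight
        total += power_heuristic(1, p_b, 1, pdf_uniform(x_b)) * integrand(x_b) / p_b
    return total

random.seed(1)
n = 100000
estimate = sum(mis_estimate(random.random(), random.random()) for _ in range(n)) / n
```

Since the two weights sum to one at every x, the combined estimator stays unbiased while each region is dominated by the strategy whose pdf is larger there.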

Here we importance sample different parts of an environment map according to how much light comes from each part of the environment.
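A common way to do this, sketched below under the assumption of an equirectangular (latitude-longitude) map with the z axis pointing up, is a piecewise-constant 2D distribution: each pixel gets a weight of luminance × sin θ, a row is chosen from the marginal CDF, and a column from that row's conditional CDF. The class name and the four-uniform interface are hypothetical, and this is not necessarily how my renderer organises it.

```python
import bisect
import math
import random

class EnvMapSampler:
    """Luminance-proportional sampling of an equirectangular environment map (a sketch)."""

    def __init__(self, luminance):
        self.h, self.w = len(luminance), len(luminance[0])
        self.row_cdfs, self.marginal = [], []
        acc_rows = 0.0
        for i, row in enumerate(luminance):
            # sin(theta) compensates for the map stretching pixels near the poles.
            sin_t = math.sin(math.pi * (i + 0.5) / self.h)
            cdf, acc = [], 0.0
            for value in row:
                acc += value * sin_t
                cdf.append(acc)
            self.row_cdfs.append(cdf)
            acc_rows += acc
            self.marginal.append(acc_rows)
        self.total = acc_rows
        if self.total <= 0.0:
            raise ValueError("environment map carries no light")

    def sample(self, u0, u1, u2, u3):
        """Return (direction, solid-angle pdf, (row, col)) from four uniforms in [0, 1)."""
        i = min(bisect.bisect_right(self.marginal, u0 * self.total), self.h - 1)  # row
        cdf = self.row_cdfs[i]
        j = min(bisect.bisect_right(cdf, u1 * cdf[-1]), self.w - 1)               # column
        prob = (cdf[j] - (cdf[j - 1] if j > 0 else 0.0)) / self.total
        # Uniform position inside the chosen pixel, mapped to spherical angles.
        theta = math.pi * (i + u3) / self.h
        phi = 2.0 * math.pi * (j + u2) / self.w
        sin_theta = math.sin(theta)
        # Pixel probability -> density over (u, v) in [0, 1]^2 -> density over solid angle,
        # using d(omega) = 2 * pi^2 * sin(theta) du dv.
        pdf = prob * self.w * self.h / (2.0 * math.pi ** 2 * sin_theta) if sin_theta > 0.0 else 0.0
        direction = (sin_theta * math.cos(phi), sin_theta * math.sin(phi), math.cos(theta))
        return direction, pdf, (i, j)

# Usage: a dim sky with one very bright "sun" pixel attracts most of the samples.
sky = [[0.1] * 32 for _ in range(16)]
sky[4][20] = 500.0
sampler = EnvMapSampler(sky)
direction, pdf, pixel = sampler.sample(0.3, 0.6, 0.5, 0.5)
```

The returned solid-angle pdf is what the MIS weights above need when environment sampling is combined with BSDF sampling.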

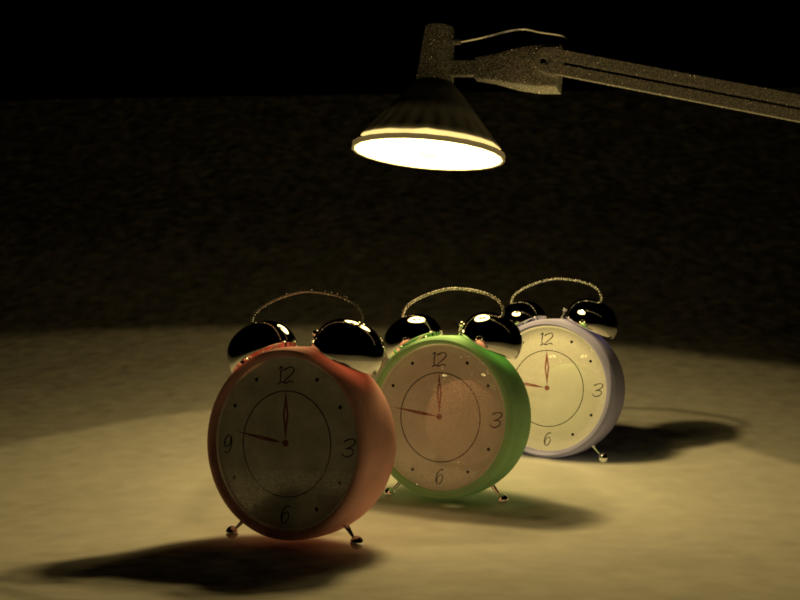

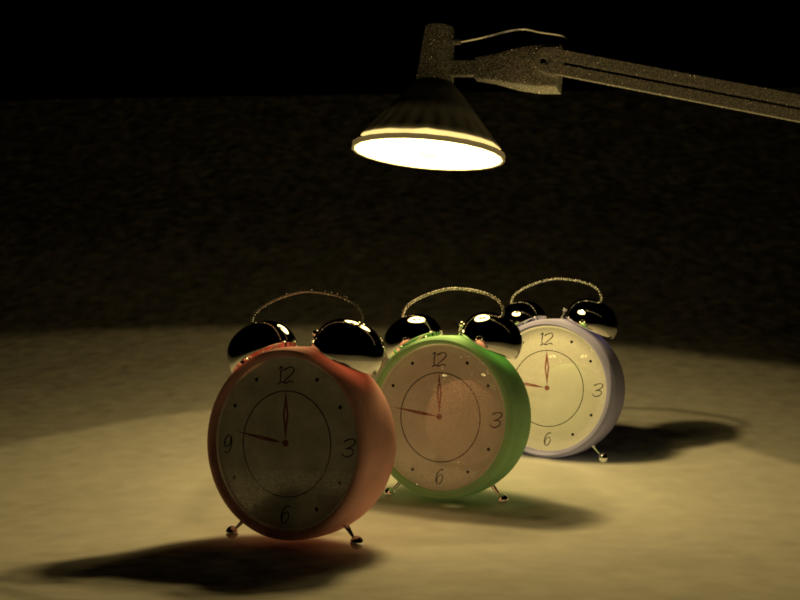

With multiple importance sampling, path tracing can already produce some more complex images.

Photon Mapping

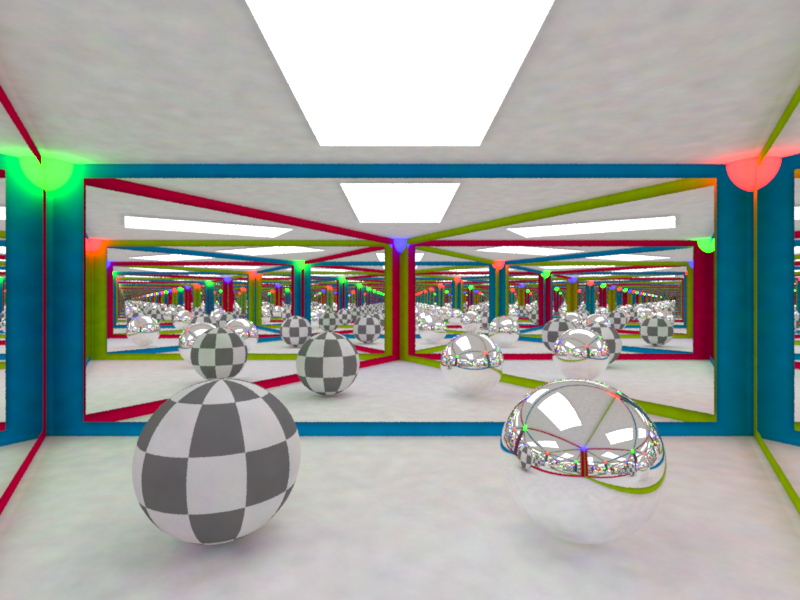

However, some scenes are very hard to solve using path tracing. In these cases we can use photon mapping. In most variants this is a biased approach that uses more memory than path tracing, but it can be much faster and more accurate in some cases.
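The core of classic (Jensen-style) photon mapping is a density estimate: photons traced from the lights are stored where they land, and the reflected radiance at a point is estimated from the power of the k nearest photons divided by the area of the disc they occupy. The blur over that disc is where the bias comes from. The sketch below assumes a Lambertian surface and a brute-force neighbour search; the names and the test scene are illustrative.

```python
import heapq
import math
import random

def radiance_estimate(photons, x, k, albedo):
    """Photon-map radiance estimate at point x on a Lambertian surface.

    photons: list of ((px, py, pz), power) tuples deposited by the photon-tracing pass.
    The k nearest photons are gathered (brute force here; real photon maps use a kd-tree)
    and their power is spread over the disc of radius r that contains them."""
    nearest = heapq.nsmallest(k, photons, key=lambda p: math.dist(p[0], x))
    r = math.dist(nearest[-1][0], x)
    flux = sum(power for _, power in nearest)
    brdf = albedo / math.pi                      # Lambertian BRDF
    return brdf * flux / (math.pi * r * r)       # radiance = BRDF * flux / disc area

# Usage: 1 W spread uniformly over a 1 m x 1 m floor gives irradiance 1 W/m^2, so the
# exact reflected radiance of an albedo-0.5 diffuse floor is 0.5 / pi.
random.seed(5)
n = 40000
floor = [((random.random(), random.random(), 0.0), 1.0 / n) for _ in range(n)]
estimate = radiance_estimate(floor, (0.5, 0.5, 0.0), 1000, 0.5)
```

Larger k lowers the noise but widens the disc, blurring sharp features such as caustic edges; this trade-off is the bias-variance balance of photon mapping.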

State of the art research by Nvidia merges photon mapping and path tracing.